Professor Daniel Goldmark (Music, CAS) has access to a treasure trove of content for his Popular Music Studies classes but no effective way to take it all in at once. The Library of Congress alone holds tens of thousands of digitized music cover artworks spanning centuries. These files, along with physical collections of cover art scattered across museums and private catalogs, serve as touchstones for significant historical movements, societal shifts, and cultural developments. He wanted a way to explore these trends both up close and at scale, examining a single image in detail while also taking in vast amounts of content at once, and extended reality (XR) seemed like the ideal place to start.

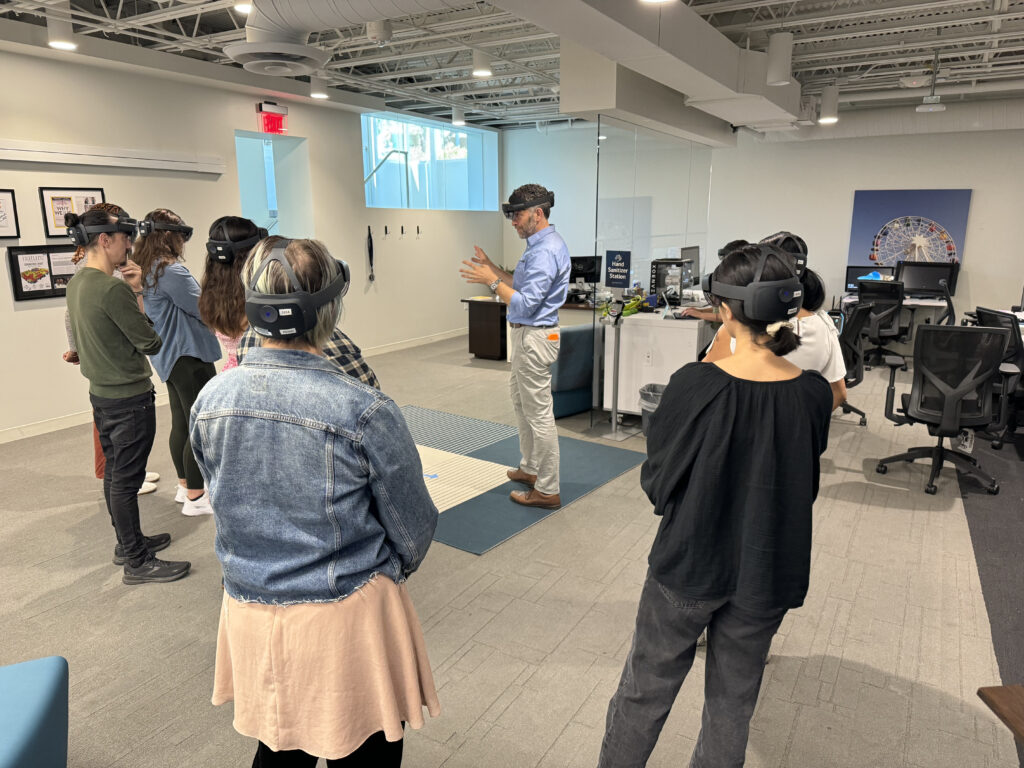

During his fellowship at the IC, Professor Goldmark created LightBox, a data visualization tool that allows users to arrange, query, sort, and tag digital content in 3D.

Students upload a curated CSV file or spreadsheet containing details of each piece, typically the title, author, date, and a link to the digital art file. They can adjust the layout and size of the display and examine individual pieces or entire groups at once. Notably, students actively perform research by "live tagging" the displayed images, and those tags are saved back to the original spreadsheet. The application offers an entirely new approach to data synthesis and research, enabling users to parse and analyze trends across fields ranging from biomedical patents to de-identified patient biometric data. Going forward, Professor Goldmark is exploring ways to enrich the experience further with audio and AI.